Security training that works with your developers

Make security a natural part of developers’ routines. Black Duck Developer Security Training, powered by Secure Code Warrior, helps developers stop risks at the source and accelerate fixes across the SDLC and CI/CD pipelines.

Developer security training tailored for your pipeline

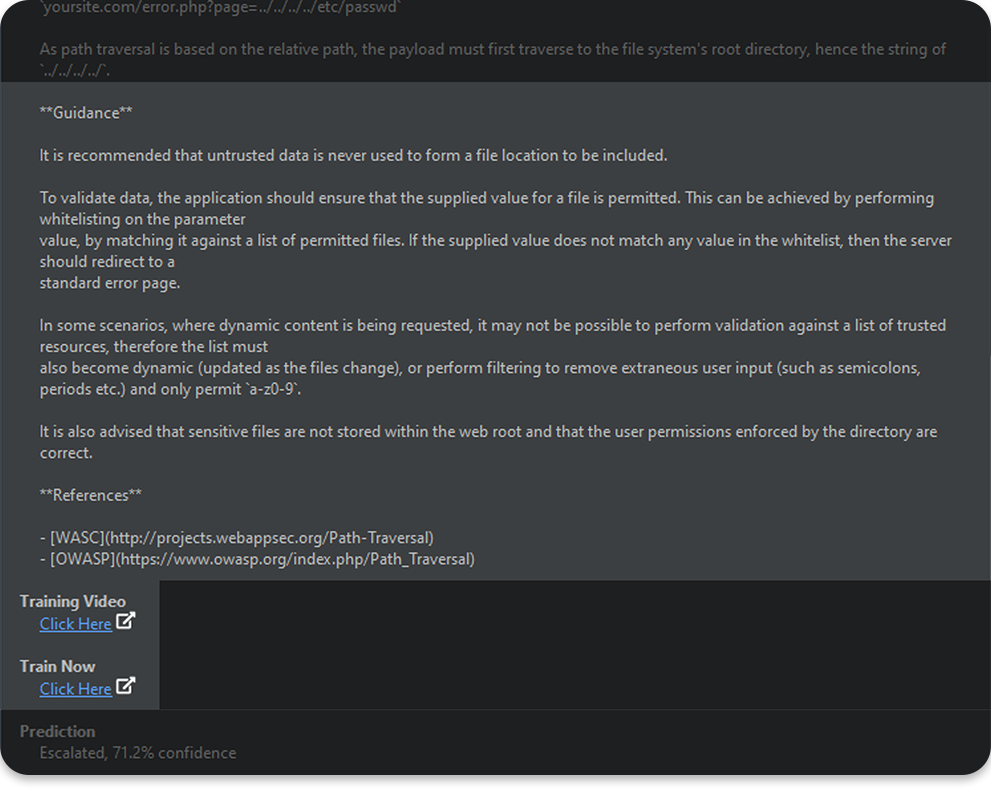

Boost developer security awareness. Provide interactive, risk-relevant training and remediation guidance that feeds directly into DevOps workflows and is tailored to your business needs.

Integrated security training for DevSecOps

Get instant, risk-relevant training and fix guidance for issues found by Black Duck’s leading AppSec tools.

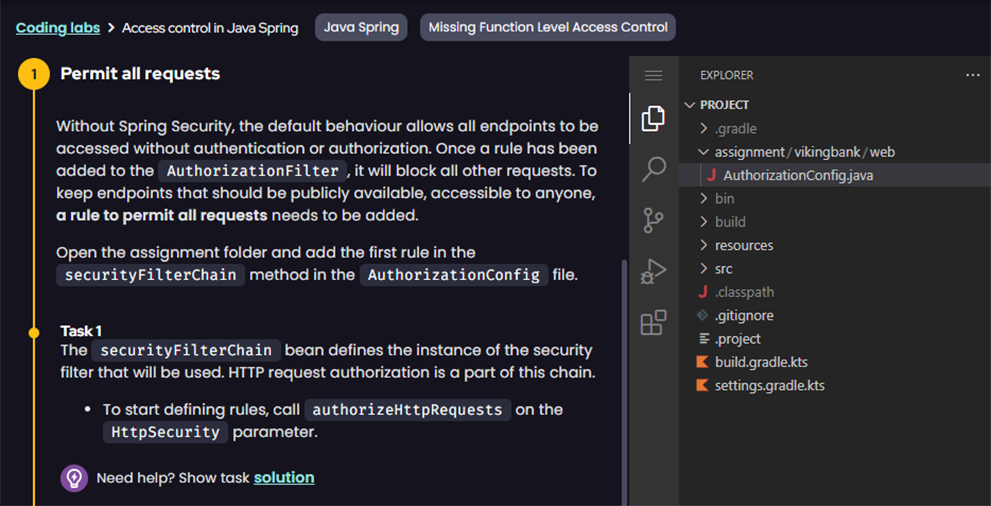

Interactive security training for developers

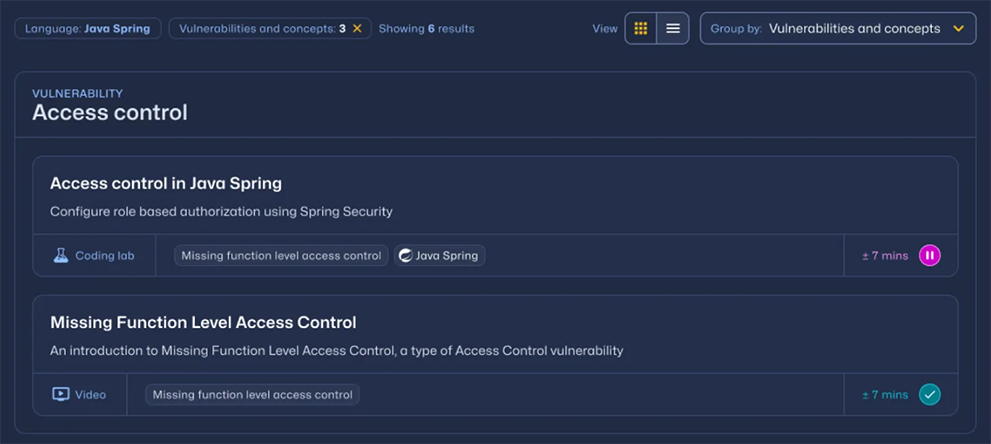

Enhance your security posture without slowing development. Get interactive application security courses, guided walkthroughs, and an expansive library of security topics tailored to diverse skill levels.

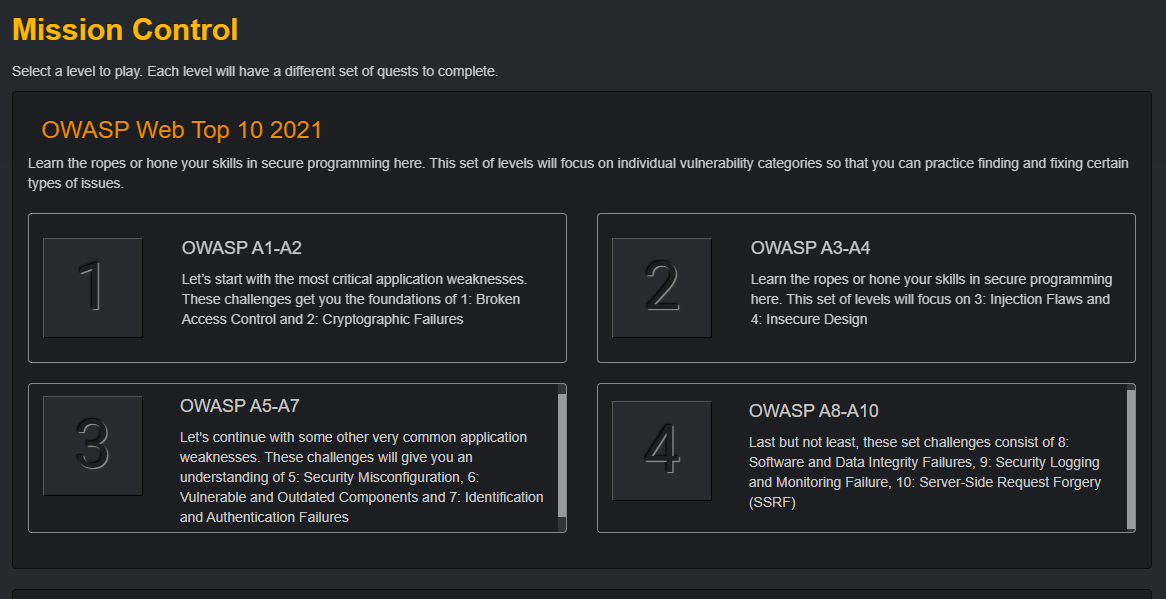

Developer security training that sticks

Engage developers with immersive training, real-world challenges, and instant feedback that turns them into security champions.

Developer security training resources

Black Duck Developer Security Training Powered by Secure Code Warrior

Developer-first security to prevent downstream risks