It’s a tale as old as time, or at least as old as modern software development: the perennial tug-of-war between security and development teams. Despite advancements in tools and methodologies, many organizations still find themselves riddled with friction. Our latest research sheds light on why this issue persists, and more importantly, how it can be resolved.

Video companion: Watch the accompanying video for a deeper dive into the data discussed in this post.

The automation paradox: Speed vs. security

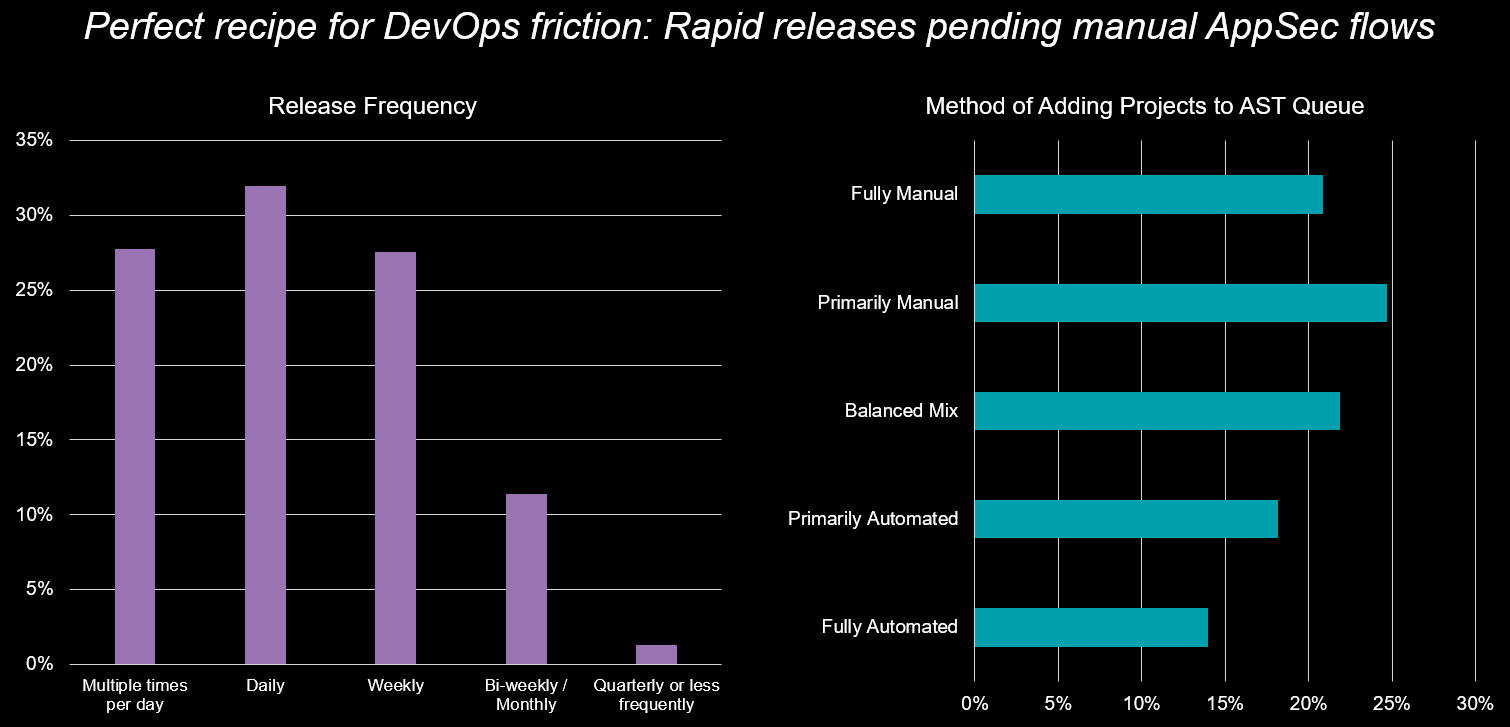

Our data reveals a striking paradox. A whopping 60% of surveyed organizations are releasing software at least daily—a testament to the agility of modern development. Yet nearly half (46%) still rely on predominantly manual processes for integrating new projects and repositories into their security testing queues. This disconnect creates a bottleneck, turning what should be a seamless flow into a series of stops and starts.

The message is clear: There's significant room for improvement, and a critical need for informed automation. This isn't just about doing things faster; it's about embedding security directly into the development life cycle, guided by your organization's unique risk tolerance and compliance standards. And as AI begins to flood developer pipelines, this need only intensifies.
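
As a rough illustration of what automating that intake step could look like, here is a minimal Python sketch. It assumes you can already export two lists: every repository your organization owns, and the repositories currently in the security testing queue. The repository names and the `onboard_new_repos` helper are hypothetical and do not come from any particular product or API.

```python
def repos_missing_from_queue(all_repos, queued_repos):
    """Return repositories that exist but are not yet queued for security testing."""
    return sorted(set(all_repos) - set(queued_repos))


def onboard_new_repos(all_repos, queued_repos):
    """Append every unqueued repository to the queue.

    Returns the updated queue and the list of repositories that were added.
    """
    missing = repos_missing_from_queue(all_repos, queued_repos)
    return list(queued_repos) + missing, missing


# Hypothetical inventory, for illustration only.
all_repos = ["payments-api", "web-frontend", "billing-worker"]
queued = ["web-frontend"]

updated_queue, newly_added = onboard_new_repos(all_repos, queued)
print(newly_added)  # ['billing-worker', 'payments-api']
```

In practice a check like this would run on a schedule or on a repository-creation event, with your risk and compliance policy deciding which scans each new repository receives.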

AppSec as an enabler, not a hurdle

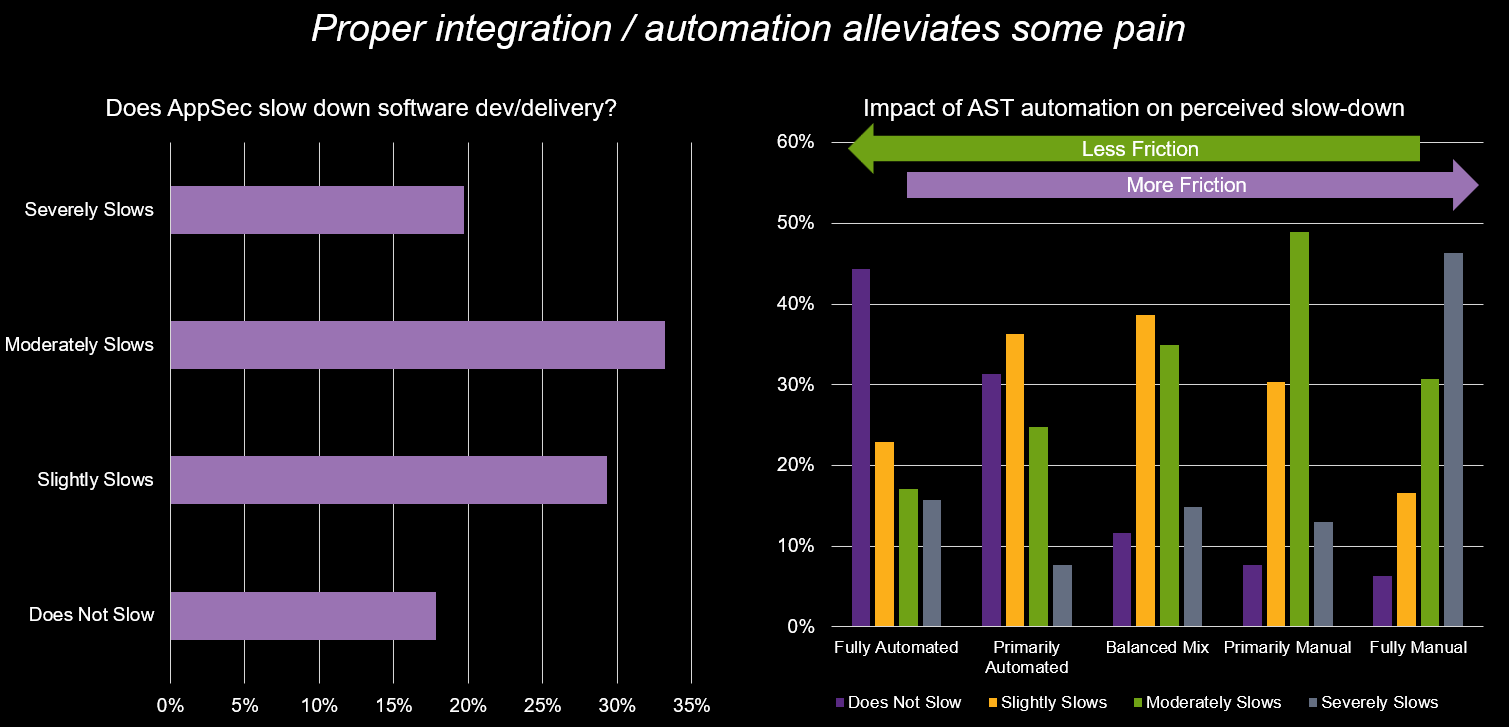

For too long, AppSec has been perceived as a hindrance. Our research confirms this, with 53% of organizations reporting that AppSec at least moderately slows down their development and release pipelines. But is this an inherent flaw in AppSec, or a symptom of how it's implemented?

When we pull back the curtain, the evidence is compelling: Automating the detection of new code, projects, and dependencies directly reduces friction, while increased manual effort exacerbates it. This isn't groundbreaking news; it's a trend we've observed for years, which makes it disheartening to see automation capabilities in the dev/test pipeline stagnate. It's also clear that perceived pain and inconvenience can heavily influence priorities when security and development teams are required to collaborate.

The perception gap: Dev vs. security

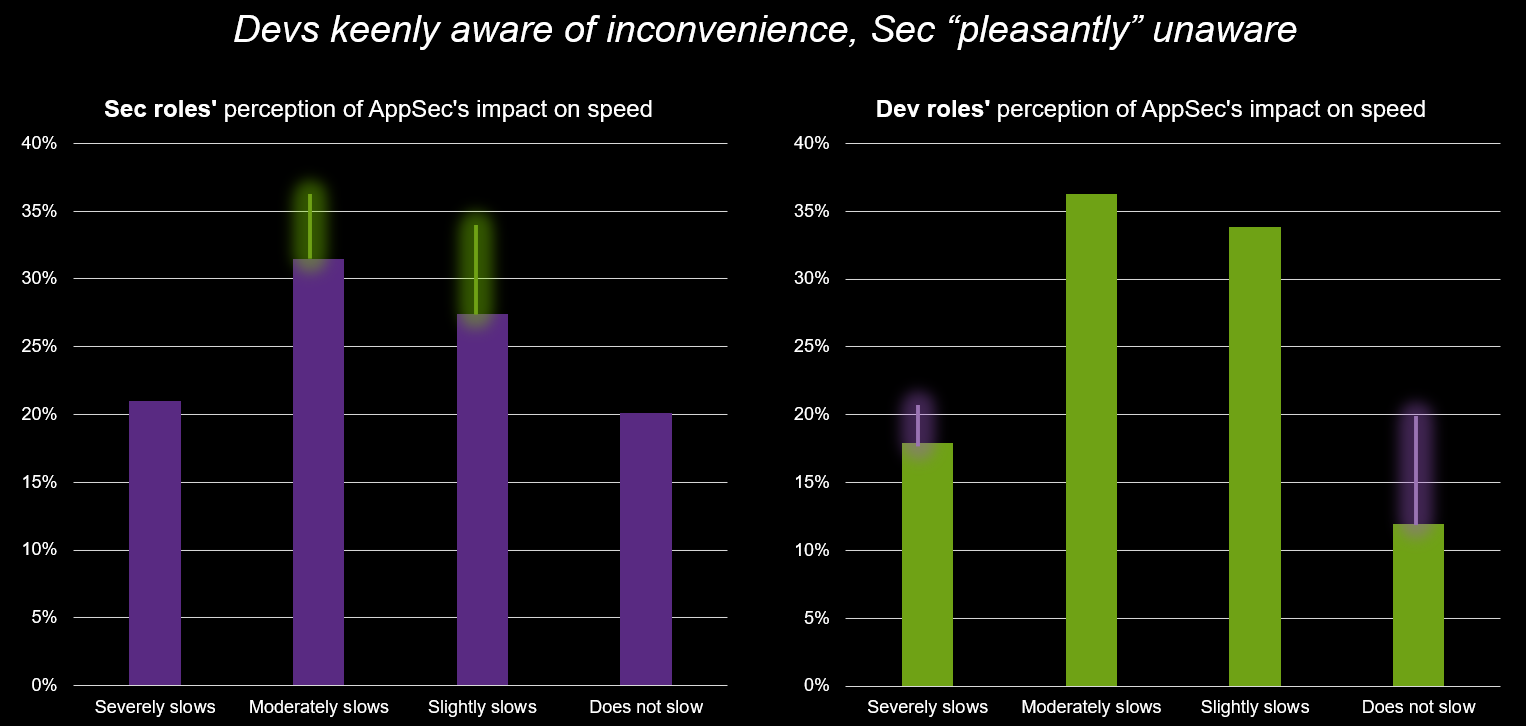

The friction point often lies in differing perceptions. Our data shows that while security professionals are somewhat split (21% feel AppSec severely slows things down, 20% feel it doesn't at all), developers have a more unified experience of impediment.

- Fifty-four percent of development professionals report moderate or severe slowdowns due to AppSec.

- A net of 88% of developers feel some kind of impact.

Compare this with security teams: 52% see a moderate or severe impact, and a net 79% report some slowdown. The perception of friction is higher on the development side.

The silent saboteur: Noisy test results

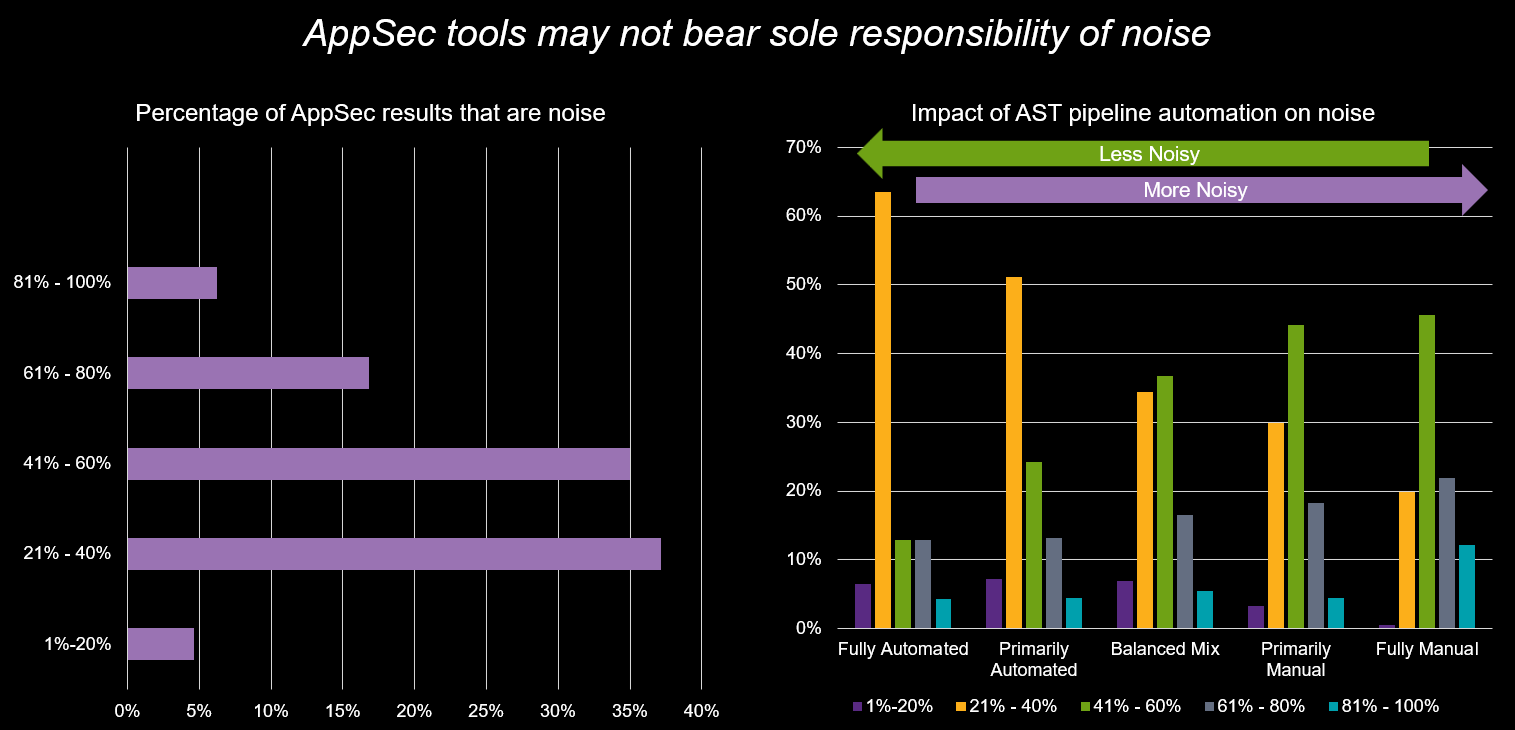

What contributes most to this perceived impact? While factors like security tests performed too late or unclear remediation paths play a role, our data points to a less obvious culprit: inaccurate security test results. These "noisy" results (redundant, incorrect, or irrelevant findings) cause distractions, consume inordinate amounts of review time, and ultimately feed the overall perception of inefficiency.

Consider this:

- Six percent of organizations report that more than 80% of their AppSec testing results are noise.

- Nearly a quarter (23%) of organizations find that over 60% of their results are noise.

This staggering amount of noise forces teams to sift through mountains of data, delaying genuine remediation efforts and eroding trust in the security process.
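
To make the idea of a noise percentage concrete, here is a minimal Python sketch of how a team might estimate it from triaged results. The `Finding` fields and the rule that a repeated rule-and-location pair counts as redundant are assumptions made for illustration. They are not how the survey measured noise, and not any specific tool's data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule_id: str
    location: str
    false_positive: bool = False  # triaged as incorrect
    in_scope: bool = True         # False means irrelevant (e.g. test-only code)


def noise_share(findings):
    """Fraction of findings that are redundant, incorrect, or irrelevant."""
    seen = set()
    noisy = 0
    for f in findings:
        key = (f.rule_id, f.location)
        redundant = key in seen  # same rule reported at the same location again
        seen.add(key)
        if redundant or f.false_positive or not f.in_scope:
            noisy += 1
    return noisy / len(findings) if findings else 0.0


# Hypothetical triaged results, for illustration only.
findings = [
    Finding("sql-injection", "app.py:10"),
    Finding("sql-injection", "app.py:10"),                 # redundant
    Finding("xss", "views.py:42", false_positive=True),    # incorrect
    Finding("weak-hash", "tests/util.py:7", in_scope=False),  # irrelevant
    Finding("path-traversal", "files.py:88"),
]

print(f"{noise_share(findings):.0%}")  # 60%
```

Tracking a figure like this over time gives both teams a shared number to discuss, rather than competing impressions of how noisy the results are.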

The right tool for the job: Automation as the solution

Are the tools themselves the issue? Are legacy testing tools ill-equipped for modern DevSecOps, especially with the influx of AI-generated code? The data says yes. Using the wrong tool for the task, or even poorly optimizing a well-suited one, directly dictates the level of noise. Our analysis clearly shows that organizations with more automation report, on average, less noise in their test results.

Key takeaways for your team

- Assess your automation: Are your tools configured correctly to enable automation, or is manual effort amplifying every misstep, making even moderate noise feel excessive?

- Beware of expansive, uninformed automation: Automation that isn't driven by accurate data can mask noisy results and leave development teams with the cleanup later.

- Invest in clarity: Prioritize solutions that not only automate but also deliver accurate, actionable security insights, reducing friction and accelerating the entire development life cycle.

At Black Duck, we understand the complexities of DevSecOps. Our solutions integrate seamlessly, provide precise results, and empower teams to collaborate effectively. It’s time to move beyond the friction and build a future where security is a natural, integrated part of rapid development.
