At Black Duck, we’re seeing a rapid adoption of AI coding assistants among leading technology and software vendors. But this advancement comes with both incredible opportunities and significant challenges for security and intellectual property. Our latest research explores how organizations are approaching the inherent risks and the undeniable benefits of AI, and the critical decisions facing DevSecOps teams.

Video companion: Dive deeper into the data and insights on AI’s impact by watching the accompanying video.

The AI imperative: Adoption and oversight

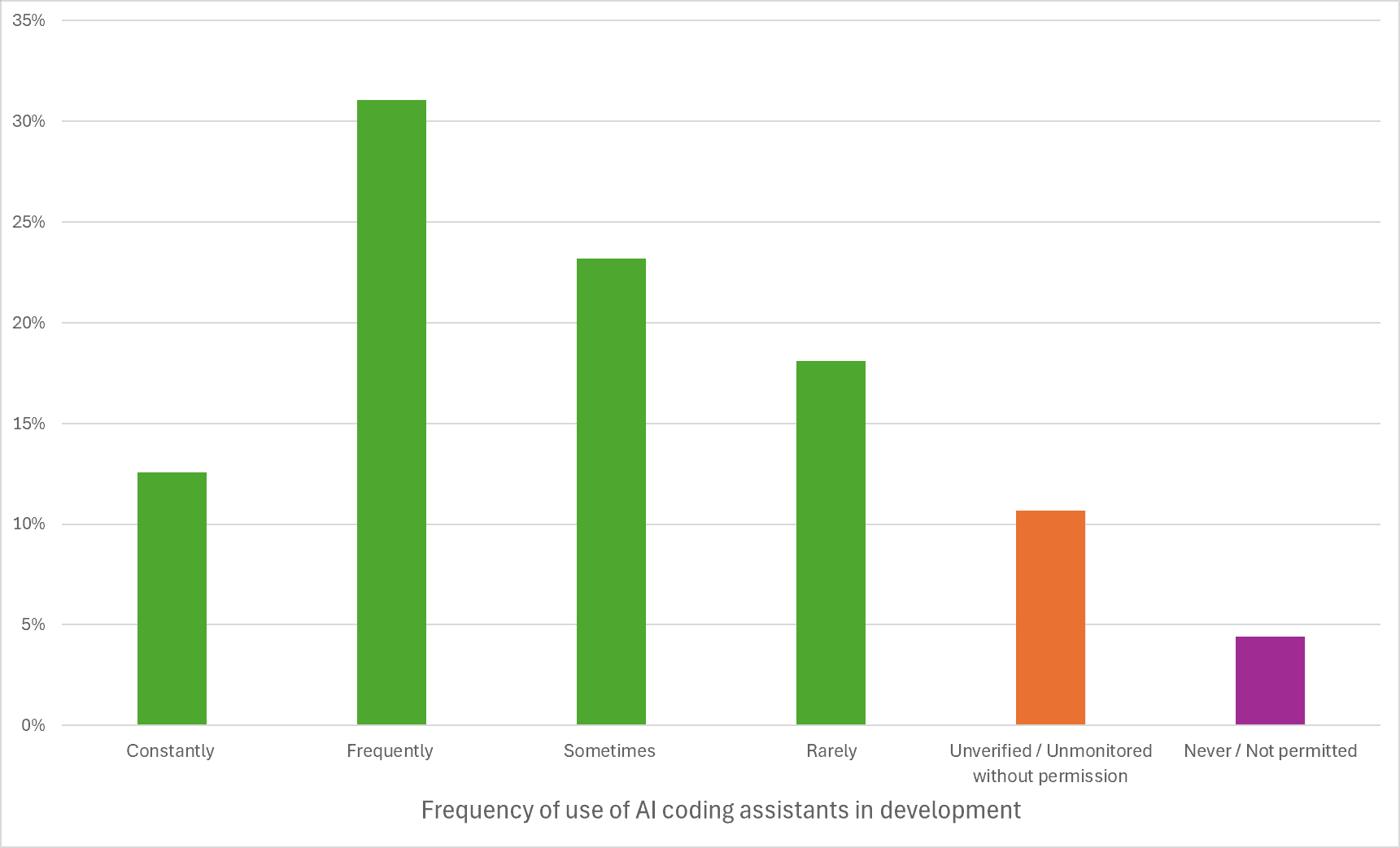

The numbers don’t lie: 85% of organizations are already leveraging AI in some development capacity. Tools like GitHub Copilot, Amazon Q, and ChatGPT are becoming commonplace for generating, modifying, and contributing code. Yet a concerning 11% of survey respondents either know or suspect AI is being used but aren’t actively monitoring its usage. This leaves organizations vulnerable to the inconsistent nature of generative AI in software development and unable to manage its adoption across contributors, teams, and the broader organization.

We know AI can “hallucinate,” and we know it’s trained on vast public datasets, including open source projects. This means AI can introduce new security risks, and it can unintentionally reproduce security or license risks present in the third-party code on which the coding assistant was trained. Paradoxically, it can also help developers write more-secure code or fix issues in existing projects.

The dual nature of AI: Risk vs. reward

When it comes to risks

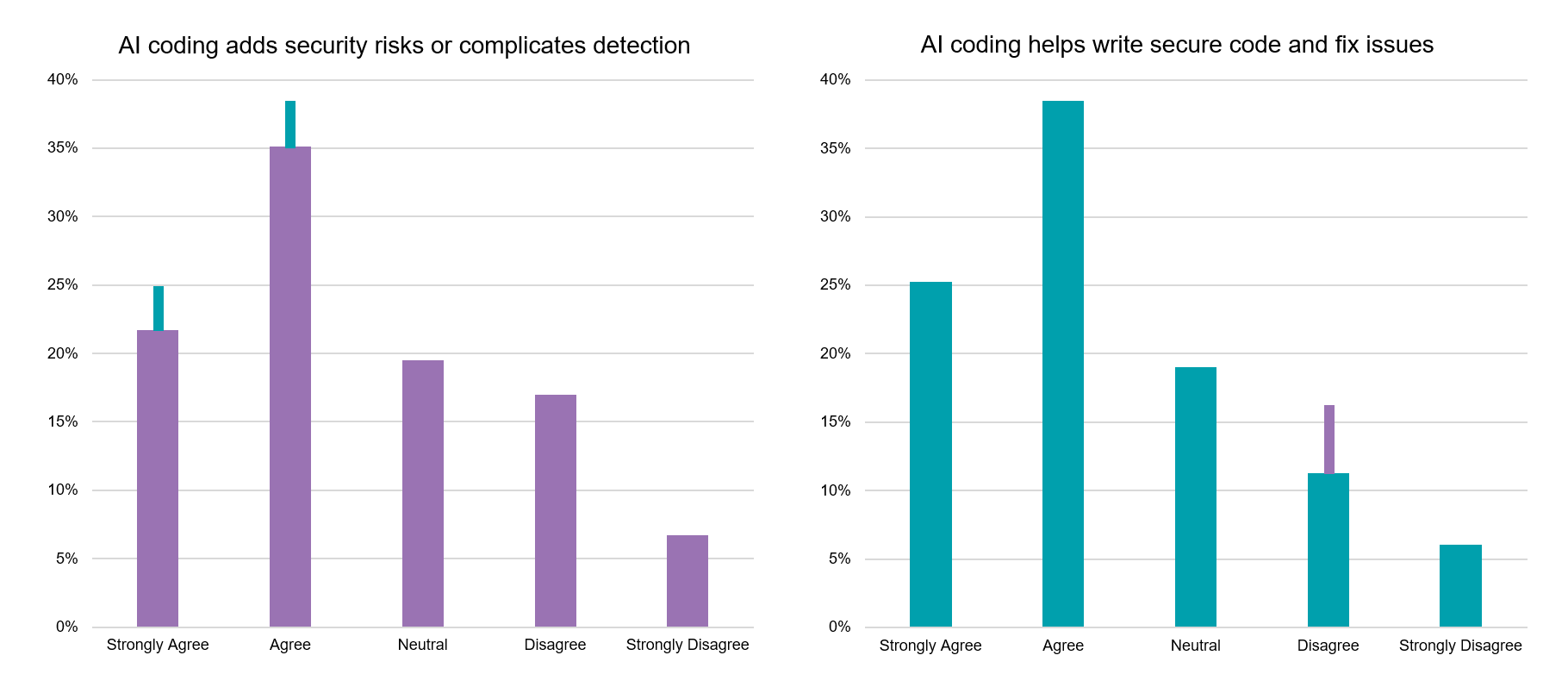

- Fifty-seven percent of organizations agree that AI coding assistants introduce security risks or make it harder to detect and identify issues.

- Interestingly, 24% disagree, perhaps believing AI only introduces the same vulnerabilities a human might, just faster.

However, the benefits are equally compelling.

- Sixty-three percent of organizations recognize that AI coding assistants can help write more-secure code or fix issues. Only 17% disagree.

Our analysis shows a slight but important skew: More organizations recognize the security benefits of AI coding assistants (63%) than recognize the security risks (57%). This suggests that the promise of AI’s assistance is a powerful driver for adoption, even while organizations recognize its potential downsides.

Confidence vs. risk perception: A deeper dive

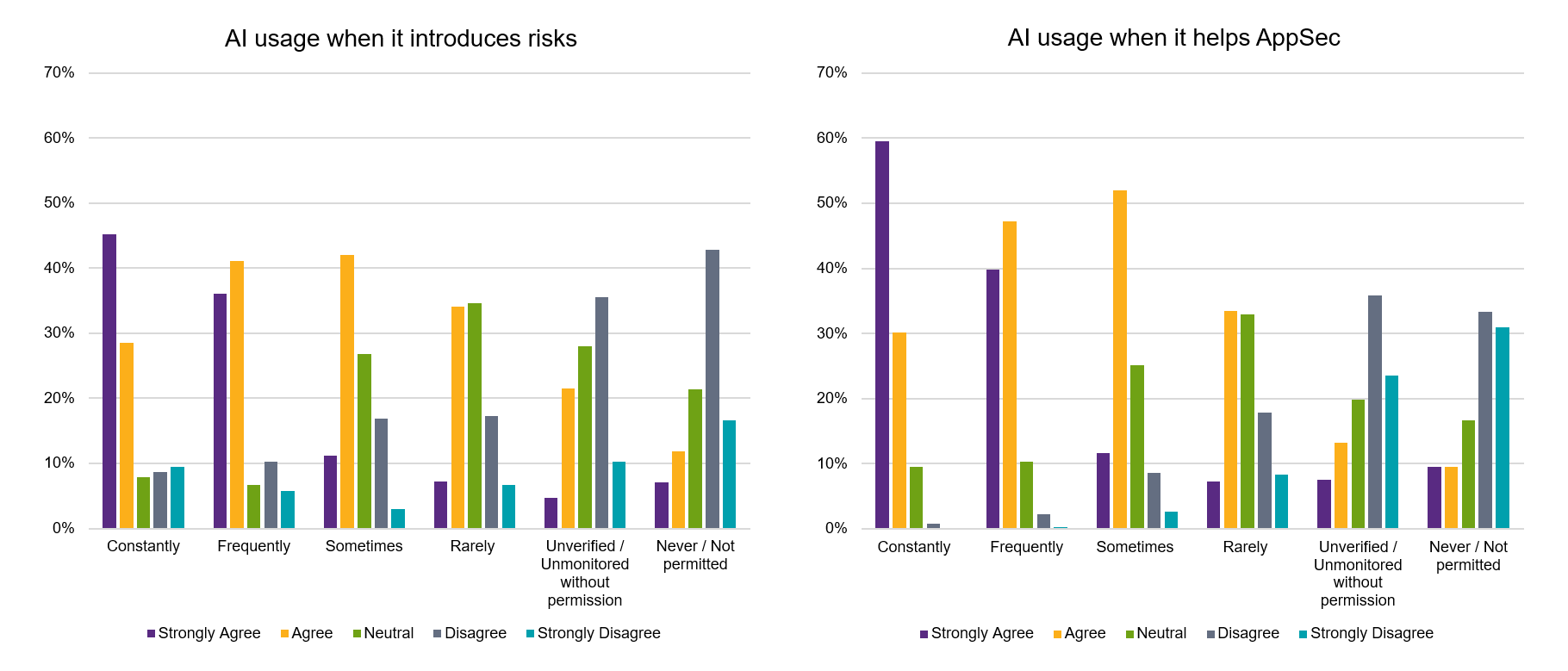

What truly drives AI adoption in development? As we saw in the prior section, our data reveals an intriguing insight: The adoption of AI is more heavily influenced by whether it yields a security benefit than by whether it introduces risks.

Those using AI frequently tend to show greater confidence in their security measures. Conversely, less-frequent AI users often communicate a distinct lack of security confidence. This doesn’t mean developers universally prioritize security; it could mean that internal support for using AI increases when it addresses AppSec concerns. That leaves leadership and DevSecOps teams with a critical decision: Is AI a security tool, or a development accelerator that can have security benefits?

The IP oversight: A growing concern

Beyond introducing security vulnerabilities, AI coding assistants represent a highly scalable risk to your valuable intellectual property (IP). Think about it: a semiautonomous agent opening pull requests with code snippets that originate from reciprocally licensed open source libraries. This could have serious legal and licensing implications.

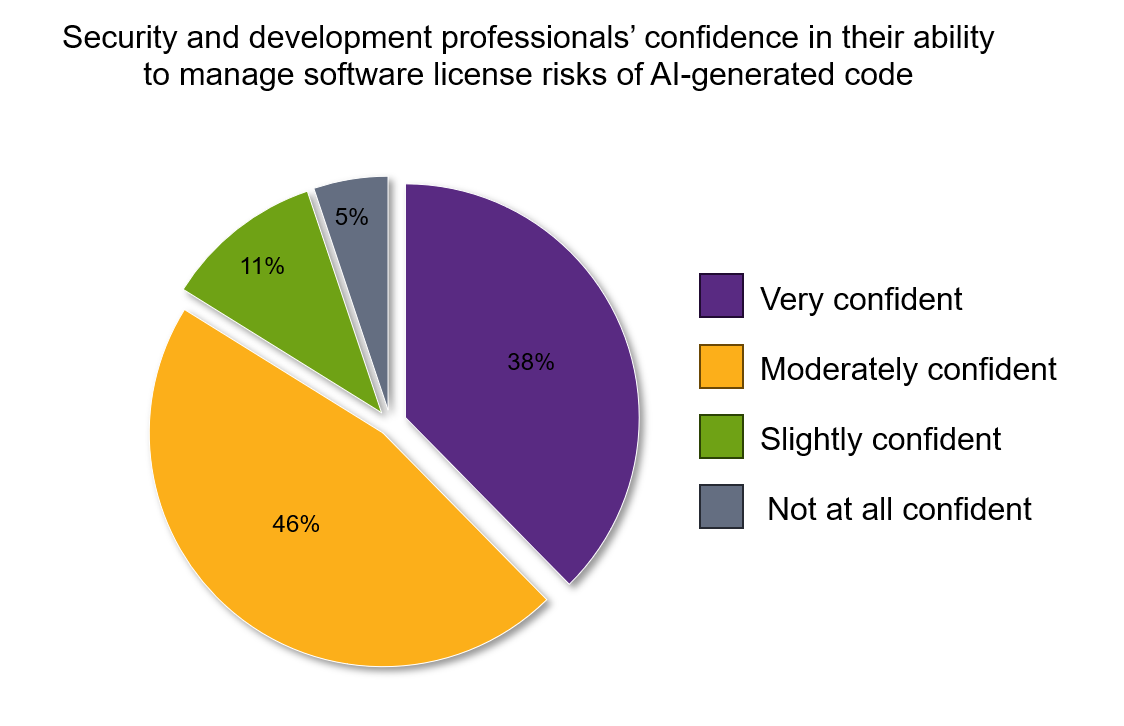

Our research, however, indicates that license compliance isn’t a primary concern for many security, development, and DevOps teams. This is a significant oversight. Given that developers are increasingly introducing third-party components and using AI trained on open source, IP risk must be on their radar.

Key takeaways for your team

- Monitor AI usage: Don’t be part of the 11% that aren’t actively monitoring AI usage. Implement mechanisms to monitor the use of AI coding tools in your development pipelines.

- Balance risk and benefit: Clearly define your organization’s stance on AI. How will you harness its benefits and mitigate its inherent risks?

- Address the IP gap: Start the dialogue about IP protection. Your organization’s value depends on keeping detrimental licenses out of your software. AppSec tools you already use can often be leveraged to identify licensed components and open source code snippets, extending the benefit of your existing investments.
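To make the IP takeaway concrete, here is a minimal sketch of a license policy check, assuming you already have a component inventory from a scanner. Everything in it is illustrative: the `Component` fields, the `ai_assisted` flag, and the short list of reciprocal licenses are assumptions made for this example, not the output format of any particular tool or a complete license policy.

```python
from dataclasses import dataclass

# Assumption: a small, incomplete set of reciprocal (copyleft) licenses,
# written as SPDX identifiers. A real policy would be set with legal counsel.
RECIPROCAL_LICENSES = {
    "GPL-2.0-only",
    "GPL-3.0-only",
    "AGPL-3.0-only",
    "LGPL-3.0-only",
    "MPL-2.0",
}


@dataclass(frozen=True)
class Component:
    """One entry in a hypothetical component inventory."""
    name: str
    license_id: str    # SPDX identifier reported for the component or snippet
    ai_assisted: bool  # hypothetical flag: was it introduced by an AI-assisted change?


def flag_for_review(components):
    """Return components under a reciprocal license, AI-assisted ones first."""
    flagged = [c for c in components if c.license_id in RECIPROCAL_LICENSES]
    # False sorts before True, so negate the flag to put AI-assisted items first.
    return sorted(flagged, key=lambda c: (not c.ai_assisted, c.name))


if __name__ == "__main__":
    # Made-up inventory for demonstration only.
    inventory = [
        Component("libfoo", "MIT", ai_assisted=True),
        Component("libbar", "GPL-3.0-only", ai_assisted=False),
        Component("snippet-42", "AGPL-3.0-only", ai_assisted=True),
    ]
    for component in flag_for_review(inventory):
        origin = "AI-assisted" if component.ai_assisted else "manual"
        print(f"{component.name}: {component.license_id} ({origin})")
```

Sorting AI-assisted findings first is a design choice for this sketch: it surfaces the changes where a human author is least likely to know where the code came from.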

At Black Duck, we provide the visibility and control necessary to embrace AI-enabled development securely and responsibly. Take steps now to understand what’s in your code, where it came from, and how it impacts your security and IP posture.
